The number 0 represents an empty quantity and acts as the additive identity in number systems such as the integers, rational, real, and complex numbers: adding 0 to any number leaves its value unchanged. Any number multiplied by 0 also gives 0. This is why division by 0 is undefined in standard arithmetic. A quotient a ÷ 0 would have to be a number b with b × 0 = a, but b × 0 is always 0, so no such b exists when a ≠ 0, and every b would qualify when a = 0.
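These properties can be checked directly in Python. The sketch below is only an illustration: the sample values and the `divide` helper are hypothetical, chosen for this example. Python signals "undefined" by raising `ZeroDivisionError`, which the helper turns into `None`.

```python
from fractions import Fraction

# Hypothetical sample values from several number systems:
# integers, a rational, a float, and a complex number.
samples = [7, -3, Fraction(2, 5), 2.5, 1 + 2j]

for x in samples:
    # 0 is the additive identity: adding it leaves x unchanged.
    assert x + 0 == x
    # Multiplying by 0 always gives 0.
    assert x * 0 == 0

def divide(a, b):
    """Return a / b, or None when the quotient is undefined (b == 0)."""
    try:
        return a / b
    except ZeroDivisionError:
        return None

print(divide(6, 3))  # 2.0
print(divide(6, 0))  # None
```

Note that Python raises the error even for floats, whereas IEEE 754 floating-point hardware would return an infinity or NaN instead.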

January 1904: Classic Mac OS and Palm OS epoch

The Classic Mac OS epoch and the Palm OS epoch are both midnight at the start of 1 January 1904, the date and time that a timestamp of zero represents on those systems.
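A timestamp on these systems is a count of seconds from that midnight, so converting one back to a calendar date is a single addition. This minimal sketch assumes whole-second counts and, because Classic Mac OS kept its clock in local time, returns a naive `datetime` with no time zone attached; `from_mac_timestamp` is a hypothetical helper name.

```python
from datetime import datetime, timedelta

# Assumed epoch: midnight at the start of 1 January 1904, with no time zone.
MAC_EPOCH = datetime(1904, 1, 1)

def from_mac_timestamp(seconds: int) -> datetime:
    """Convert a seconds-since-1904 count to a naive datetime (hypothetical helper)."""
    return MAC_EPOCH + timedelta(seconds=seconds)

print(from_mac_timestamp(0))       # 1904-01-01 00:00:00
print(from_mac_timestamp(86_400))  # 1904-01-02 00:00:00
```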

1907: Pronunciation of "0" in years

When a year such as 1907 is read aloud, the digit 0 is commonly pronounced "oh", as in "nineteen oh seven."

January 1970: Unix epoch

The Unix epoch is 00:00:00 UTC on 1 January 1970, the date and time that a Unix timestamp of zero represents.
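Python's standard `datetime` module can show what Unix time 0 maps to and how far apart the 1970 and 1904 epochs are. This is a sketch under one stated assumption: the 1904 epoch is treated as UTC here, even though Classic Mac OS counted from local midnight, so real conversions may also need a time-zone adjustment. The `mac_to_unix` helper is hypothetical.

```python
from datetime import datetime, timezone

# Unix time 0 is midnight UTC at the start of 1 January 1970.
unix_zero = datetime.fromtimestamp(0, tz=timezone.utc)
print(unix_zero.isoformat())  # 1970-01-01T00:00:00+00:00

# Assumption: treat the 1904 epoch as UTC so both dates share one frame.
mac_epoch = datetime(1904, 1, 1, tzinfo=timezone.utc)

# 66 years, 17 of them leap years: 24,107 days of 86,400 seconds each.
EPOCH_OFFSET = int((unix_zero - mac_epoch).total_seconds())
print(EPOCH_OFFSET)  # 2082844800

def mac_to_unix(mac_seconds: int) -> int:
    """Shift a seconds-since-1904 count to seconds since 1970 (hypothetical helper)."""
    return mac_seconds - EPOCH_OFFSET
```

Because both counts are plain seconds, moving between the two epochs is just a constant offset.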

Trending

15 minutes ago Tom Emmer, Minnesota Immigration, Operation Metro Surge: Praise and Fears in Twin Cities

15 minutes ago DiZoglio faces Audit Obstacles: Judge and AG resist, legislature involved in Massachusetts fight.

15 minutes ago Similarities and Differences: Trump vs. Washington, Importance of Washington's Farewell Address Today

16 minutes ago Amber Ruffin & Grey Henson discuss new musical 'Bigfoot' before Off-Broadway premiere.

16 minutes ago Stephanie Pratt Publicly Criticizes Brother Spencer's LA Mayoral Campaign, Calls it 'Stupidity'

1 hour ago Joe Hendry shines in NXT, eyes dream match with Drew McIntyre in Scotland.

Popular

Kid Rock born Robert James Ritchie is an American musician...

Randall Adam Fine is an American politician a Republican who...

Pam Bondi is an American attorney lobbyist and politician currently...

Barack Obama the th U S President - was the...

The Winter Olympic Games a major international multi-sport event held...

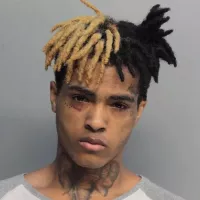

XXXTentacion born Jahseh Dwayne Ricardo Onfroy was a controversial yet...