ChatGPT, developed by OpenAI and launched in 2022, is a generative AI chatbot based on the GPT-4o large language model. It generates human-like conversational responses, allowing users to refine conversations concerning length, format, style, detail, and language. Credited with accelerating the AI boom, ChatGPT has drawn significant investment and public attention to AI. Concerns exist regarding its potential to displace human intelligence, enable plagiarism, and spread misinformation.

2018: Juvenile Justice System Act Enacted

Under section 12 of Pakistan's Juvenile Justice System Act, enacted in 2018, the court can grant bail subject to certain conditions. However, whether a 13-year-old suspect is granted bail after arrest is left to the court's discretion.

2021: "Stochastic Parrots" Paper Later Used to Describe ChatGPT

The 2021 research paper "On the Dangers of Stochastic Parrots: Can Language Models Be Too Big? 🦜" by Emily M. Bender, Timnit Gebru, Angelina McMillan-Major, and Margaret Mitchell was later cited by writers for The Verge, who compared ChatGPT to a "stochastic parrot".

December 2022: ChatGPT Widely Assessed with Powerful Capabilities

In December 2022, ChatGPT was widely assessed as possessing unprecedented and powerful capabilities, with notable figures like Kevin Roose of The New York Times hailing it as the best AI chatbot released to the public.

December 2022: Google Executives Sound "Code Red" Alarm Over ChatGPT

In December 2022, Google executives sounded a "code red" alarm, fearing that ChatGPT's question-answering ability posed a threat to Google Search, Google's core business.

December 2022: Stack Overflow Bans ChatGPT for Generating Answers

In December 2022, Stack Overflow banned the use of ChatGPT for generating answers to questions due to the factually ambiguous nature of its responses.

2022: Data Collected from LIU College of Pharmacy's Drug Information Service

From 2022 to 2023, data collected from LIU College of Pharmacy's drug information service was used in a study presented at a conference of the American Society of Health-System Pharmacists in December 2023, which compared ChatGPT's responses to drug information service questions with researched responses from pharmacists.

2022: ChatGPT Used to Write Phishing Emails and Malware

In 2022, Check Point Research and others noted that ChatGPT could write phishing emails and malware, especially when combined with OpenAI Codex.

2022: ChatGPT's Generated Text Comparable to a Good Student's Work

In 2022, technology writer Dan Gillmor used ChatGPT on a student assignment and found that its generated text was on par with what a good student would deliver, concluding that "academia has some very serious issues to confront".

January 2023: Massachusetts Bill Proposed with ChatGPT's Assistance

In January 2023, Massachusetts State Senator Barry Finegold and State Representative Josh S. Cutler proposed a bill partially written by ChatGPT, "An Act drafted with the help of ChatGPT to regulate generative artificial intelligence models like ChatGPT".

January 2023: MedPage Today Notes AI as a Useful Tool in Medicine

In January 2023, MedPage Today noted that researchers have published papers touting AI programs as useful tools in medical education, research, and clinical decision making.

January 2023: Nick Cave Responds to ChatGPT-Authored Song

In January 2023, Nick Cave responded to a song written by ChatGPT in his style, calling it "bullshit" and a "grotesque mockery of what it is to be human."

January 2023: Study Indicates ChatGPT Has a Pro-Environmental, Left-Libertarian Orientation

In January 2023, a study stated that ChatGPT has a pro-environmental, left-libertarian orientation, suggesting potential bias.

January 2023: International Conference on Machine Learning Bans Undocumented Use of ChatGPT

In January 2023, the International Conference on Machine Learning banned any undocumented use of ChatGPT or other large language models to generate text in submitted papers.

February 2023: Time Magazine Features ChatGPT on Cover

In February 2023, Time magazine featured a screenshot of a conversation with ChatGPT on its cover, highlighting "The AI Arms Race" and expressing concerns.

February 2023: Papers Evaluate ChatGPT's Proficiency in Medicine

In February 2023, two separate papers were published that evaluated ChatGPT's proficiency in medicine using the USMLE. Findings were published in JMIR Medical Education and PLOS Digital Health.

March 2023: Pew Research Center Poll Finds 14% of American Adults Tried ChatGPT

A March 2023 Pew Research Center poll found that 14% of American adults had tried ChatGPT.

March 2023: USMLE Performance of GPT-3.5 and GPT-4 Found to Decline

Researchers at Stanford University and the University of California, Berkeley found that the performance of GPT-3.5 and GPT-4 on the USMLE declined between March 2023 and June 2023.

March 2023: ChatGPT Responses Analyzed for Correctness on Stack Overflow

In March 2023, researchers at Purdue University analyzed ChatGPT's responses to questions about software engineering and computer programming, finding that 52% of them contained inaccuracies and 77% were verbose.

March 2023: ChatGPT's Application in Clinical Toxicology Tested

In March 2023, a paper tested ChatGPT's application in clinical toxicology and found that the AI "fared well" in answering a "very straightforward clinical case example".

March 2023: Open Letter Calls for Pause of Giant AI Experiments

In March 2023, more than 20,000 signatories, including prominent scientists and tech founders like Yoshua Bengio, Elon Musk, and Steve Wozniak, signed an open letter advocating for an immediate pause of giant AI experiments such as ChatGPT, due to potential risks to society.

March 2023: Italian Data Protection Authority Bans ChatGPT in Italy

In late March 2023, the Italian data protection authority banned ChatGPT in Italy and opened an investigation, citing concerns over age-inappropriate content and data privacy.

March 2023: ChatGPT Blocked in China

On March 2, 2023, ChatGPT was blocked by the Great Firewall in China. Chinese state media characterized ChatGPT as a way for the United States to spread misinformation.

April 11, 2023: Judge in Pakistan Uses ChatGPT to Decide Bail

On April 11, 2023, a session court judge in Pakistan used ChatGPT in deciding whether to grant bail to a 13-year-old accused in a case.

April 2023: Mayor Brian Hood Plans Legal Action Against ChatGPT Over False Information

In April 2023, Brian Hood, mayor of Hepburn Shire Council, planned to take legal action over false information generated by ChatGPT, which erroneously claimed that he had been jailed for bribery.

April 2023: Il Foglio Publishes ChatGPT-Generated Articles

In April 2023, Italian newspaper Il Foglio published one ChatGPT-generated article a day on its website, hosting a special contest for its readers. The articles covered topics ranging from the replacement of journalists by AI to Elon Musk's management of Twitter, the Meloni government's immigration policy, and the competition between chatbots and virtual assistants.

April 2023: Study Assesses ChatGPT's Breast Cancer Screening Advice

In April 2023, a study in Radiology tested ChatGPT's ability to answer queries about breast cancer screening. The authors found that it answered appropriately "about 88 percent of the time"; however, in one case, for example, it gave advice that had become outdated about a year earlier.

May 2023: Man Arrested in China for Using ChatGPT to Generate Bogus Report

In May 2023, Chinese police arrested a man who allegedly used ChatGPT to generate a bogus report about a train crash and posted it online for profit.

May 2023: Geoffrey Hinton Leaves Google, AI Scientists Demand Action on Extinction Risk

In May 2023, Geoffrey Hinton, one of the "fathers of AI", left Google, expressing concerns about future AI systems surpassing human intelligence. Additionally, a statement by hundreds of AI scientists and leaders demanded prioritizing the mitigation of extinction risks from AI.

May 2023: Samsung Bans Generative AI Company-Wide After Sensitive Material Uploaded to ChatGPT

In May 2023, Samsung banned generative AI company-wide after sensitive material was uploaded to ChatGPT, raising data security concerns.

May 2023: Attorneys Sanctioned for Using ChatGPT-Generated Fictitious Legal Cases

In May 2023, in Mata v. Avianca, Inc., a personal injury lawsuit, the plaintiff's attorneys reportedly used ChatGPT to generate a legal motion. The motion cited numerous fictitious legal cases generated by ChatGPT, involving fictitious airlines and containing fabricated quotations and internal citations. The attorneys were sanctioned by the court and fined $5,000.

June 2023: "ChatGPT-Powered Church Service" Held in Germany

In June 2023, a "ChatGPT-powered church service" was held at St. Paul's church in Fürth, Germany. Theologian and philosopher Jonas Simmerlein, who presided, said that it was "about 98 percent from the machine". Reactions to the ceremony were mixed.

June 2023: Writers Sue OpenAI for Copyright Infringement

In June 2023, two writers sued OpenAI for copyright infringement, claiming that the company's training data came from illegal websites that host copyrighted books.

June 2023: Performance of GPT Models on Code Generation Problems Declines

Researchers at Stanford University and the University of California, Berkeley, found that between March and June 2023, the share of directly executable responses that GPT-3.5 and GPT-4 produced for LeetCode code generation problems fell significantly.

July 2023: Sarah Silverman, Christopher Golden, and Richard Kadrey Sue OpenAI and Meta for Copyright Infringement

In July 2023, comedian and author Sarah Silverman and authors Christopher Golden and Richard Kadrey sued OpenAI and Meta for copyright infringement.

July 2023: FTC Launches Investigation into OpenAI

In July 2023, the FTC launched an investigation into OpenAI, the creator of ChatGPT, over allegations that the company scraped public data and published false and defamatory information.

July 2023: FTC Investigates OpenAI Over ChatGPT Data Security and Privacy Practices

In July 2023, the US Federal Trade Commission (FTC) issued a civil investigative demand to OpenAI to investigate whether the company's data security and privacy practices in developing ChatGPT were unfair or harmed consumers, in violation of Section 5 of the Federal Trade Commission Act of 1914.

August 2023: Paper Finds Political Bias in ChatGPT

In August 2023, a paper found a "significant and systematic political bias toward the Democrats in the US, Lula in Brazil, and the Labour Party in the UK" in ChatGPT. OpenAI acknowledged plans to allow ChatGPT to create "outputs that other people (ourselves included) may strongly disagree with".

September 2023: Authors Guild Files Copyright Infringement Complaint

In September 2023, the Authors Guild, representing 17 authors including George R. R. Martin, filed a copyright infringement complaint against OpenAI, alleging that OpenAI illegally copied copyrighted works to train ChatGPT.

October 2023: Porto Alegre Approves Ordinance Written by ChatGPT

In October 2023, the council of Porto Alegre, Brazil, unanimously approved a local ordinance, proposed by councilman Ramiro Rosário and written by ChatGPT, that would exempt residents from paying for the replacement of stolen water consumption meters.

November 2023: Patronus AI Research Compares Performance of Generative AI Models on Financial Statement Analysis

In November 2023, Patronus AI compared the performance of GPT-4, GPT-4-Turbo, Claude 2, and LLaMA-2 on a 150-question test about information in financial statements. The research indicated that GPT-4-Turbo and LLaMA-2 struggled with retrieval-based question answering, while GPT-4-Turbo and Claude 2 showed limitations in long-context-window scenarios.

December 2023: ChatGPT Included in Nature's 10 List

In December 2023, ChatGPT became the first non-human to be included in Nature's 10, an annual listicle curated by Nature of people considered to have made a significant impact in science. It was also noted that ChatGPT "broke the Turing test".

December 2023: Four Arrested in China for Allegedly Using ChatGPT to Develop Ransomware

In December 2023, Chinese police arrested four people who had allegedly used ChatGPT to develop ransomware.

December 2023: The New York Times Sues OpenAI and Microsoft for Copyright Infringement

In December 2023, The New York Times filed a lawsuit against OpenAI and Microsoft, alleging copyright infringement because ChatGPT and Microsoft Copilot could reproduce Times articles without authorization. The Times sought to prevent the use of its content for AI training and to have it removed from existing datasets.

December 2023: Litigant Cites Hallucinated Cases in Tax Appeal

In December 2023, a self-representing litigant in a tax case before the First-tier Tribunal in the United Kingdom cited a series of hallucinated cases purporting to support her argument, leading to wasted time and public money.

December 2023: Study Compares ChatGPT to Pharmacist Responses

In December 2023, research presented at a conference of the American Society of Health-System Pharmacists compared ChatGPT's responses to drug information service questions with researched responses from pharmacists, finding a high failure rate and fabricated citations in ChatGPT's answers.

2023: Increase in Malicious Phishing Emails Attributed to Generative AI Use

From the launch of ChatGPT in the fourth quarter of 2022 to the fourth quarter of 2023, there was a substantial increase in malicious phishing emails and credential phishing, which cybersecurity professionals attributed to increased use of generative artificial intelligence, including ChatGPT.

2023: Australian MP Warns of AI's Potential for 'Mass Destruction'

In 2023, Australian MP Julian Hill warned the national parliament that the growth of AI could cause "mass destruction", citing risks like cheating, job losses, discrimination, disinformation, and military applications during his speech.

2023: Assessment of ChatGPT Citations and Course Material Generation

In 2023, geography professor Terence Day assessed citations generated by ChatGPT and found them to be fake. He also found that it was possible to generate high-quality introductory college courses using ChatGPT. ChatGPT was also seen as an opportunity for cheap, individualized tutoring, leading to the creation of specialized chatbots such as Khanmigo.

2023: Research Papers Written by ChatGPT Lead to Concerns in Academia

In 2023, Spanish chemist Rafael Luque admitted that a plethora of his research papers were written by ChatGPT, raising concerns about the use of ChatGPT in academia due to its tendency to hallucinate and produce inaccurate citations.

2023: Research Reveals Cyberattack Vulnerabilities of ChatGPT

Research conducted in 2023 revealed weaknesses of ChatGPT that make it vulnerable to cyberattacks, including jailbreaks, reverse psychology, social engineering, and phishing attacks. However, the technology can also improve security through cyber defense automation, threat intelligence, attack identification, and reporting.

January 2024: Study Finds Low Accuracy Rate for GPT-4 in Diagnosing Pediatric Medical Cases

In January 2024, a study conducted by researchers at Cohen Children's Medical Center found that GPT-4 had an accuracy rate of 17% when diagnosing pediatric medical cases.

February 2024: Most Claims Dismissed in Copyright Lawsuit Against OpenAI and Meta

In February 2024, most of the claims in the copyright lawsuit against OpenAI and Meta were dismissed, except the "unfair competition" claim, which was allowed to proceed.

March 2024: Patronus AI LLM Performance Comparison

In March 2024, Patronus AI conducted a test comparing LLM performance, and found that GPT-4, Mistral AI's Mixtral, Meta AI's LLaMA-2, and Anthropic's Claude 2 reproduced copyrighted sentences from books verbatim in a significant percentage of responses when prompted.

May 7, 2024: OpenAI Announces Tamper-Resistant Watermarking

On May 7, 2024, OpenAI announced in a blog post that it was developing tamper-resistant watermarking tools for identifying AI-generated content.

May 2024: OpenAI Removes Accounts Involved in State-Backed Influence Operations

In May 2024, OpenAI removed accounts involving the use of ChatGPT by state-backed influence operations such as China's Spamouflage, Russia's Doppelganger, and Israel's Ministry of Diaspora Affairs and Combating Antisemitism.

July 2024: GPT-4o in ChatGPT Links to Scam News Sites

In July 2024, Futurism reported that GPT-4o in ChatGPT would sometimes link to "scam news sites that deluge the user with fake software updates and virus warnings", potentially leading users to download malware.

August 2024: FTC Bans Fake User Reviews Created by Generative AI Chatbots

In August 2024, the FTC voted unanimously to ban marketers from using fake user reviews created by generative AI chatbots (including ChatGPT) and to bar influencers from paying for bots to inflate their follower counts.

November 2024: Study Reports Higher Diagnostic Accuracy with GPT-4 for Illness Diagnosis

In a November 2024 study of 50 physicians diagnosing illnesses, GPT-4 alone achieved 90% accuracy, while physicians scored 74% without AI assistance and 76% when using the chatbot.

2024: Survey Finds Chinese Youth Using Generative AI

In 2024, a survey of Chinese youth found that 18% of respondents born after 2000 reported using generative AI "almost every day" and that ChatGPT is one of the most popular generative AI products in China.

2024: The Last Screenwriter Film Released

In 2024, the film The Last Screenwriter, created and directed by Peter Luisi, was written with the use of ChatGPT, and was marketed as "the first film written entirely by AI".

February 2025: OpenAI Identifies and Removes Influence Operations Targeting Chinese Dissidents

In February 2025, OpenAI identified and removed influence operations, termed "Peer Review" and "Sponsored Discontent", used to attack overseas Chinese dissidents.

February 2025: Delhi High Court Accepts ANI's Case Against OpenAI

In February 2025, the Delhi High Court accepted ANI's case against OpenAI over concerns that ChatGPT was sharing paywalled content without consent. OpenAI argued the court lacked jurisdiction due to the firm's lack of physical presence in India.

2025: Study Finds Antisemitic Bias in Major LLMs Including ChatGPT

In 2025, a study published by the Anti-Defamation League found that several major LLMs, including ChatGPT, Llama and Claude, showed antisemitic bias.

2025: Las Vegas Truck Explosion Planned with ChatGPT's Help

The Las Vegas Metropolitan Police Department reported that the perpetrator of the 2025 Las Vegas truck explosion used ChatGPT to help plan the incident.

Mentioned in this timeline

Elon Musk is a prominent businessman best known for leading...

Google LLC is a multinational technology corporation specializing in online...

The United States of America is a federal republic of...

Samsung Group is a South Korean multinational manufacturing conglomerate and...

California is the most populous US state located on the...

India officially the Republic of India is a South Asian...

Trending

12 minutes ago Janet Jackson to Receive the ICON Award and Perform at the 2025 AMAs.

13 minutes ago Ethan Hawke's 'The Lowdown' Noir Series Premieres September 23 on FX at 9 PM.

13 minutes ago Theo James: New Face of Dolce & Gabbana Light Blue and Downton Abbey Role

1 hour ago Asia-Pacific markets decline amid US-China trade assessment; Wall Street rally steadies.

21 days ago Kesha reveals secret engagement, announces tour with Scissor Sisters and appears with Kelly Clarkson.

1 hour ago Sarah Silverman Jokes Offended Pamela Anderson; Reveals Bedtime Routine on John Mulaney's Show.

Popular

Jupiter the fifth planet from the Sun is the Solar...

Michael Jordan also known as MJ is an American businessman...

Pope Francis is the current head of the Catholic Church...

Cristiano Ronaldo often nicknamed CR is a highly decorated Portuguese...

Ronald Reagan the th U S President - was a...

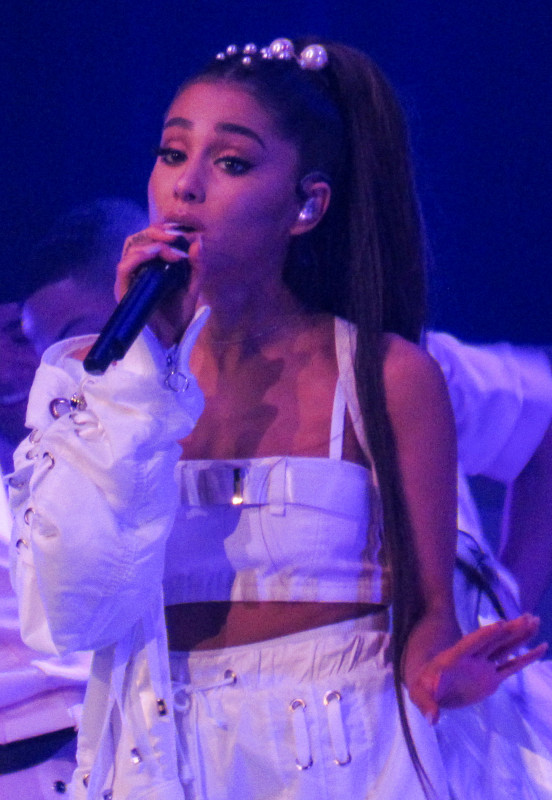

Ariana Grande-Butera is an American singer songwriter and actress recognized...